Steven Davis on Human Performance, High-Energy Risk, and Solace in the Graph

Steven Davis lives near Knoxville, Tennessee, close to the Smoky Mountains. It’s a peaceful setting for someone who has spent his career thinking about what happens when work goes wrong, fast.

Steven started on the front lines as a paramedic-firefighter, where you meet people on the worst day of their lives, and you see the aftermath of decisions that felt “small” at the time. Later, he moved into nuclear power, an industry built around a single, unforgiving expectation: the job must go right.

Those two worlds shaped his approach to safety today. He does not treat safety as a rulebook. He treats it as a decision system.

From Fire Trucks to Nuclear Plants: A Safety Career Built in High Consequence Environments

Steven’s early career was in municipal service as a paramedic-firefighter for about six years. He then “stumbled through graduate school” at the University of Tennessee, earning a master’s degree in safety management, partly because a paid assistantship opened the right door at the right time.

From there he entered the nuclear power industry, initially in emergency planning. Once leadership noticed his safety background, he was pulled into safety roles and eventually into Human Performance, a specialized discipline focused on reducing mistakes in industries where mistakes carry huge consequences.

Human Performance taught him how to analyze events, build corrective actions, and understand the organizational “levers” that influence behavior. He later applied those principles to contractor safety and maintenance work, with results he could point to.

One example he shared highlighted a dramatic shift: at a company doing roughly 15 million hours of work per year, the organization reduced OSHA recordables from 64 to 11 by applying human performance principles to how work was planned, executed, and reviewed.
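To put those numbers in perspective, here is a rough sketch of the arithmetic. It assumes, and the article does not confirm, that 64 and 11 are annual recordable counts and that hours worked stayed near 15 million in both years. The 200,000-hour base is the standard OSHA incidence rate convention (100 full-time workers for a year); the function names are just illustrative.

```python
# Assumptions (not stated in the article): 64 and 11 are annual recordable
# counts, and hours worked stayed at roughly 15 million in both years.
HOURS_WORKED = 15_000_000
OSHA_BASE_HOURS = 200_000  # 100 full-time workers x 40 h/week x 50 weeks


def incidence_rate(recordables: int, hours_worked: float) -> float:
    """Recordable cases per 100 full-time workers per year."""
    return recordables * OSHA_BASE_HOURS / hours_worked


def percent_reduction(before: float, after: float) -> float:
    """Relative drop from `before` to `after`, as a percentage."""
    return (before - after) / before * 100


rate_before = incidence_rate(64, HOURS_WORKED)
rate_after = incidence_rate(11, HOURS_WORKED)
reduction = percent_reduction(64, 11)

print(f"Rate before: {rate_before:.2f}")
print(f"Rate after: {rate_after:.2f}")
print(f"Reduction: {reduction:.1f}%")
```

Under those assumptions, the recordable rate falls from about 0.85 to about 0.15 per 100 full-time workers, a drop of roughly 83 percent.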

That point lands with a lot of safety professionals: major improvements often come from changing how people think and how systems align, not from adding more policies.

The Five-Element Test: Why Some Safety Programs Look Busy and Still Go Nowhere

Steven says you can break a safety program into five elements, and the more aligned they are, the safer the organization can become:

- Programming: What are the initiatives and what are you trying to improve?

- Process: Where do those initiatives live in daily work?

- Metrics: What do you track and what are the trends?

- Communications: What are the stories and lessons learned?

- Organization: Are the right people and structures in place to support it?

He explains it through a real conversation with a customer who asked why Steven’s contractor technicians were outperforming internal teams in safety. The customer pointed to their “Stop Work Program” as evidence of commitment.

Steven’s response was a reality check: a program is only real if it’s connected to everything around it.

So, he asked questions that cut through the noise:

- Why was the stop work program created? Was it built from incident analysis, or copied from a competitor?

- Where does stop work show up in training, job planning, observations, and investigations?

- Do you know how often people actually stop work, week to week?

- Do you share real examples where stopping work prevents harm, so people know what good looks like?

Their answers were mostly no. They had posters.

Steven’s conclusion is simple and sharp: posters are not a safety system. Alignment is.

Human Performance in One Sentence: Perfect Work, Imperfect Humans

Steven gives one of the clearest definitions of Human Performance you’ll hear:

Some tasks require perfection. People are inherently fallible. Human performance is how you manage that intersection.

He describes teaching leaders to identify:

- Which tasks are critical

- What error precursors are present: fatigue, time pressure, stress, confusion, assumptions

- What weak controls increase risk: unclear steps, weak verification, poor role clarity

He references Daniel Kahneman’s book Thinking, Fast and Slow to explain why this matters. People operate in two modes: automatic thinking and deliberate thinking. The danger in high-risk work is staying in automatic mode when the task demands deliberate attention.

Steven’s goal is to teach crews to “think about their thinking,” then build tools that help them switch modes at the right time.

“Take Two”: A Two-Minute Pause That Changes Outcomes

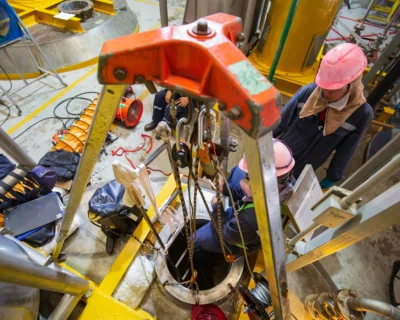

After leaving nuclear power, Steven moved into transmission and distribution work, what he calls the “poles and wires business.” That work covers vast distances, spans multiple worksites, and carries serious hazards.

At Forbes, his team implemented a Human Performance tool called Take Two. It sounds small, but it is designed to prevent big mistakes.

The concept is simple: after the pre-job brief, crews stop for two minutes before touching the work and ask a few key questions:

- Is this what we expected?

- Does everyone know their role?

- What assumptions are we carrying into this job?

- Where are the “bites,” the pinch points where energy can hurt us: energized lines, tension, mechanical energy, mobile equipment?

Steven’s reasoning is practical: a tailboard can cover the major hazards, but it cannot capture every detail of every risk. You need a moment standing at the work, looking at it, when reality replaces assumptions.

This aligns with a major principle in safety: many incidents are not caused by lack of planning, but by failing to re-check the plan when conditions shift.

Off-Normal Conditions: The Pattern Behind Many Incidents

Steven also introduced a program around Off Normal Conditions (ONC).

His observation from analyzing incidents over his career is consistent: there is often a change in the work, and teams keep going as if nothing has changed.

Examples he gave will feel familiar:

- The work scope has changed since the tailboard

- Weather moves in and time pressure rises

- A crew member leaves and the team loses knowledge and skill

- Delays create stress and shortcut thinking

ONC gives crews a clear trigger: when something shifts, you stop and re-evaluate assumptions, often using Take Two as the pause.

It’s a simple idea with a big impact: change is a hazard.

The Hardest Challenge: Buy-In Across Distance, Culture, and Routine

When Steven talks about the toughest challenge in safety, he goes straight to engagement.

He can teach ideas and show videos, but until the “light bulb” goes off personally, nothing sticks. The challenge is even bigger when your workforce is spread across a vast geographic area.

Steven described work spread from northern Manitoba down to El Paso, Texas. Different sites. Different cultures. Different norms. Different levels of attention.

To increase reach, his team uses:

- a weekly safety communication called The Wire

- roughly 70 safety alerts per year, including lessons and good catches

- in-person visits to locations, because culture is not built through email alone

If there’s a theme in his approach, it’s this: repetition wins.

Advice for New Safety Pros: Learn Work as Done, Not Work as Imagined

Steven’s advice to new safety professionals is direct: spend time in the field.

He draws a clear line between:

- Work as imagined in the office

- Work as actually performed in the field

The gap between those two is where risk lives.

He even tells his own son, who wants to follow his path, to earn a journeyman lineman ticket first. Learn the craft. Learn the reality. Then learn about safety systems.

The Future: Psychology and the Shift to Serious Risk

Steven believes the next evolution of safety will come from psychology. Compliance and training matter, but high performance comes from understanding how people behave when tension rises.

He gives a small example that explains a big pattern: when the light turns yellow, most people hit the gas. That same internal clock shows up in work when delays happen. People feel time pressure and start “fixing” the problem. That’s when shortcuts appear. That’s when incidents happen.

He also highlights a major shift happening in safety measurement: moving beyond traditional recordable injury rates to focus on PSIF (potential serious injury or fatality) and SIF (serious injury or fatality).

The insight behind that shift is uncomfortable but important: recordables can drop while fatalities stay flat. Preventing cuts and bruises does not automatically prevent life-altering harm.

So, Steven focuses on high-energy hazards that truly keep leaders awake at night:

- electrical contact and arc flash

- powered mobile equipment

- suspended loads and rigging

- mechanical and stored energy

“Solace in the Graph”: The Quote That Sums Up Safety Work

Steven’s examples consistently point to the hierarchy of controls, even if he doesn’t label it that way.

Near the end of the conversation, Steven says something many safety professionals quietly feel:

You must take solace in the graph.

Because the people who never get hurt will never know they were the ones your work protected. There’s rarely a thank-you moment. The proof is in the trendline.

Fewer injuries. Fewer close calls. Fewer life-altering events.

That’s the work.